It's that time of year again when many people are deciding which college they should attend come fall. Whether they are a high school senior, aspiring professional, or seasoned veteran seeking an MBA, the ultimate decision of which college is based largely on reputation.

The perennial data source for college ratings that most students turn to is the US News America's Best Colleges Rankings. Rankings are of limited use, but there is only so much information you can get from first-hand experience. Taking the campus tour, talking with students, and doing your own due diligence is about as far as you can go short of attending the school. The US News ranking methodology is based on many factors: peer assessment, faculty resources, selectivity, class sizes, and financial aid, among many others.

What does it really mean to rank a list of schools? I can define a personal value function that tells me which of two schools I prefer, and by doing pairwise comparisons I can sort a list of schools by how beneficial each would be to my personal goals. However, you can imagine that integrating these value functions over a large sample of students would pretty quickly produce a ranking distribution that is rather flat. While the US News rankings strive to give a complete overall view of each school, there are a few weaknesses in their method. Some of these weaknesses have drawn criticism of the rankings from both students and colleges.

First, many of the metrics are subjective, such as peer assessment and selectivity. This allows the editors to "smooth out" the rankings toward what they expect, for example by "reinterpreting" selectivity.

My major problem with the US News rankings, however, is that they are not free. In fact, only the rankings of the top few schools are viewable. To see the whole list you have to pay $14.95.

So, to this end, I've decided to try my hand at generating my own rankings. Since I'm no expert in the field of evaluating colleges, I'm going to cheat and use statistical learning techniques. I'm going to do this with the help of just Google and some open source software. You won't even have to pay $14.95 to see the results!

First off, I found a list of American Universities from the Open Directory. I parsed out this page with a quick hand-written wrapper to get the names and URLs. Now, the fun part. What kind of "features" should I use for evaluating each school? This is where a bit of editorial control comes to bear. I wanted to capture the essence of what the US News methodology used, but I wanted to do this in a completely automated way using Google. So for each feature, I defined a Google Search query (shown in brackets) that would give me a rough approximation of that particular attribute:

- Peer assessment [link:www.stanford.edu] - This is how some search engines approximate "peer assessment", by counting the number of other pages citing you

- Size [site:www.stanford.edu] - a larger school would have a larger web, right? =)

- Number of faculty [dr. "home page" site:www.stanford.edu] - hopefully those professors have websites that mention "dr." and "home page"

- Scholarly publications ["Stanford University" in scholar.google.com]

- News mentions ["Stanford University" in news.google.com]
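As a rough illustration of the list above, the five queries for one school could be generated like this. This is only a sketch: the function name `feature_queries` and the `"web"`/`"scholar"`/`"news"` labels are made up for this example, the feature names are borrowed from the regression output later in the post, and the code only builds the query strings. How the result counts were actually fetched is not described here, so that step is left out.

```python
def feature_queries(host, name):
    """Build the five search queries for one school.

    Returns a dict mapping a feature name to a (search_property, query)
    pair. The property labels are hypothetical placeholders for plain web
    search, scholar.google.com, and news.google.com.
    """
    phrase = f'"{name}"'  # exact-phrase search on the school's name
    return {
        "peerAssessment": ("web", f"link:{host}"),
        "sizeWeb": ("web", f"site:{host}"),
        "numFaculty": ("web", f'dr. "home page" site:{host}'),
        "scholarlyPubs": ("scholar", phrase),
        "newsMentions": ("news", phrase),
    }


if __name__ == "__main__":
    queries = feature_queries("www.stanford.edu", "Stanford University")
    for feature, (where, query) in queries.items():
        print(f"{feature:15s} {where:8s} {query}")
```

Each query's reported hit count then becomes one number in that school's feature vector.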

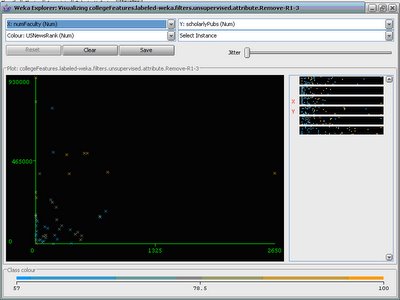

First, I load the data into WEKA, a free, open source data mining package written in Java. It implements many off-the-shelf classification and regression algorithms and offers both an API and a GUI. Let's take a look at a few slices of the data:

This figure plots newsMentions on the x-axis against scholarlyPublications on the y-axis. The points plotted are the 50 schools that have a score in the US News rankings (schools beyond 50th place don't have an overall score in the US News rankings). The color of the dots goes from low (blue) to high (orange), and the trend runs from blue in the lower left to orange in the upper right. As you can see, not only are the two Google queries correlated, they also seem to be jointly correlated with the US News score.

Plotting numberFaculty against scholarlyPublications also shows a positive correlation. So maybe these queries weren't totally bogus and do carry some information.

The next step is to fit a statistical model to the training data. The basic idea is to train the model on the 50 colleges with US News scores and to test the model on all 1700+ American colleges. The natural first step is to try to fit a linear function of the five features. The best-fit line is

USNewsRank = (-0.0003) * peerAssessment + (0) * sizeWeb + (0.0063) * numFaculty + (0) * scholarlyPubs + (0.0002) * newsMentions + 68.7534

This simple model has a root mean squared error (RMSE) of 10.4223. So, in the linear model, the size of the web and the number of scholarly publications don't play a role. Fair enough, but can we do better?

The answer is yes. I next used a support vector machine model with a quadratic kernel function. This gave me an RMSE of 7.2724 on the training data. The quadratic kernel allows more complex dependencies in the training data to be modelled, which is why the training error is lower, but would this result in better performance on the larger data set? There is no quick answer to this, but we can see from the output what the model predicted.
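To make the linear fit and the RMSE figures concrete, here is a minimal sketch in plain Python. The coefficients and intercept are the ones quoted in the post; the function names and the example feature values are invented for illustration, and this is not the WEKA code that produced the numbers.

```python
import math

# Coefficients and intercept as quoted for the linear model in the post.
COEFFS = {
    "peerAssessment": -0.0003,
    "sizeWeb": 0.0,
    "numFaculty": 0.0063,
    "scholarlyPubs": 0.0,
    "newsMentions": 0.0002,
}
INTERCEPT = 68.7534


def predict_linear(features):
    """Predicted US News score for one school's feature counts."""
    return INTERCEPT + sum(COEFFS[name] * features[name] for name in COEFFS)


def rmse(predicted, actual):
    """Root mean squared error between two equal-length sequences."""
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("need two non-empty sequences of equal length")
    squared = sum((p - a) ** 2 for p, a in zip(predicted, actual))
    return math.sqrt(squared / len(predicted))


if __name__ == "__main__":
    # Hypothetical hit counts, not taken from the real data set.
    school = {
        "peerAssessment": 10000,
        "sizeWeb": 500000,
        "numFaculty": 1000,
        "scholarlyPubs": 200000,
        "newsMentions": 5000,
    }
    print(predict_linear(school))
```

Because sizeWeb and scholarlyPubs have zero weight, changing them has no effect on the prediction, which is exactly the "don't play a role" observation.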

| Name | USNews | SVM |
| --- | --- | --- |
| University of Washington | 57.4 | 98.929 |
| Yale University | 98 | 98.081 |
| Harvard University | 98 | 97.953 |
| Massachusetts Institute of Technology | 93 | 92.996 |
| Stanford University | 93 | 92.922 |
| National University | | 92.523 |
| Columbia University | 86 | 92.255 |
| Princeton University | 100 | 90.609 |
| New York University | 65 | 85.271 |
| University of Chicago | 85 | 85.052 |
| Indiana University | | 83.973 |
| University of Pennsylvania | 93 | 83.91 |
| Duke University | 93 | 79.487 |
| University of Southern California | 66 | 78.645 |
| University of Pittsburgh | | 78.274 |
| Cornell University | 84 | 78.051 |
| University of Florida | | 77.864 |
| University of Colorado | | 76.877 |
| The American College | | 76.597 |
| University of California, Berkeley | 78 | 76.192 |

This table shows the top 20 scores given by my program, alongside the US News rating when available (i.e. when the school was in the top 50). As you can see, many schools received consistent marks across the two ratings. However, there are quite a few surprises. My program ranked University of Washington as the best school, whereas US News gave it a score of only 57.4. Having visited UDub myself while I was working at Microsoft, I'm not completely surprised. It's a truly modern university that has recently been producing lots of good work, but let's not overgeneralize. I believe that "National University" being high in my rankings is a flaw in the methodology. There are probably many spurious matches to that query, like "Korea National University". Ditto for "The American College". They just had fortunate names. However, I think the scores for other schools that were unranked by US News, like University of Pittsburgh and Indiana University, are legitimate.

Now, is this a "good" ranking? It's hard to say, since there is no magic gold standard to compare it to. That is the problem with many machine learning experimental designs. But without squabbling too much over the details, I think this quick test shows that even some very basic statistics derived from Google queries can be rich enough in information to answer loosely defined questions. Although I don't think my program is going to have high school guidance counselors worried about their jobs anytime soon, it does do a decent job. An advantage of this approach is that it can be personalized with a few training examples to rank all of the schools based on your own preferences.

Extracting useful knowledge by applying statistics to summary data (page counts) is one thing, but I've taken it to the next level by actually analyzing the content within the HTML page. The result of that work is a project called Diffbot, and you can check it out here.

If you're interested, take a look at the full ranking of all 1720 schools (in zipped CSV format).

36 comments:

dugg

Interesting technique. This is definitely the way many of the projects based on Google API should be moving towards.

Cool, but the overall college rankings are pretty meaningless. Can you post how your program would rank specific fields like engineering/law/med school?

The rankings look more trustworthy than US News'. Nice to see my college up there ;)

"University of Washington" and "Washington University" are different schools. Your post implies the latter (Wash U is that school's nickname), but I wonder if the algorithm falsely boosted that school's performance by aggregating results from both schools (and the umpteen other schools with almost identical names).

Good point. To be clear, I was referring to the first one, in Seattle (I think the right nickname is UDub). There is a possibility of false boosting, but since I do exact phrase search, I think "Washington University" and "University of Washington" would not have cross-matched. Correct me if I'm wrong.

Nicely done. However, in looking near the bottom of the rankings, there are some interesting, and I would say anomalous, results. Washington University in Saint Louis, University of Texas at Austin, University of Michigan-Ann Arbor, University of Illinois at Urbana-Champaign, Boston College, and The Pennsylvania State University certainly are not among the bottom 20 of the universities you've surveyed. At first glance, it appears that their weakness is due to limited numbers of faculty web sites and poor showings in the news.

Are there any other underlying reasons you see for these schools' surprising underperformance in your evaluation? Is this a reflection of a failure by the universities, or is it inherent to the methodology?

In the interest of full disclosure, I am a Penn State student, so I was pretty disappointed to find PSU all the way down the list.

woodfourth,

I agree with you that the rankings near the bottom of the list are less trustworthy. The reason for this though, in my opinion, has less to do with any underperformance of Penn State or any weakness in the methodology than it does with a flaw with the training data. See, the problem is that US News only provides the rankings for schools that made it to the TOP of the list, instead of a random sample of the list.

Therefore a statistical method that is trained on that top 50 list will operate well in that regime, but will be inaccurate when it's forced to extrapolate to data points that lie further away.

BU's canonical URL is http://www.bu.edu/, not web.bu.edu. Don't know if/how that would influence its ranking.

As fascinating as this is, it simply proves how inadequate most ranking systems are. Your chief mistake was in using criteria that US News uses: they are almost completely meaningless and invite misrepresentation. Using Google Scholar is another major mistake, as the vast majority of scholarly papers are inaccessible to that indexing system. God knows what a faculty member is anymore - definition? The real creativity will be when someone uses available data sets that haven't been used in new ways, enabling new analyses, and focusing on human measures, not machine-generated statistics (such as web search figures).

Scholarly pubs drop out of the linear model because numFaculty is already included.

More faculty (usually) means more publications. If you tried including scholarlyPubs/numFaculty, I bet that A) this new variable would be included and B) the RMSE would be better. It might explain U. Pitt and Indiana University as well; both of those universities are respectable; but they're also quite large.

Incidentally, you can determine whether the SVM is really better than the linear function by using leave-one-out cross-validation. Word.

Also, as to the comment by Paul Wiener that the vast majority of scholarly papers are inaccessible to Google Scholar. Are you sure about that? It was true 6-9 months ago, but I tried it again last month, and found it more exhaustive than any of the proprietary databases that my university library has in any of the fields I work in. It found papers in obscure third-tier conference proceedings, and in a variety of minor journals I'd never heard of... as well as in top journals and conferences. (Although this does raise a concern w/ just counting publications -- a publication at a minor third-tier journal probably shouldn't count equally with a publication in Nature)...

I don't see the point of this. The data gathered is clearly bad. Harvard has one faculty who published 930000 papers; many schools have 0 faculty. I don't know how Google Scholar was used, but I do know that the number of papers I published is less than half the number reported by Google Scholar.

newsMentions seems like a faulty parameter. A search for Stanford in news.google.com will turn up multiple hits for the same news article. Besides, news articles could be, and most often are, about anything: labour disputes, new campus laws, sporting events, etc. These are factors that shouldn't affect the ranking.

The data plots appear to show increasing variance, suggesting a transformation (at least for the linear model). That may be why the quadratic fits better. Linear models are easier to interpret, and a transformation or two may make the linear model just as useful. As far as comments on limitations go, it seems to me that the $14.95 version also has these limitations, and yours is free. After all, you weren't suggesting that people base their choice solely on your ranking.

wow, looks like my alma mater did horrendous...did I over pay for my education???

Yeah... great rankings. If you are a retard! On the Michigan faculty directory, over 3000 of the faculty have websites, not the 263 as stated. On a side note, www.umich.edu was the number one web site on the internet in 1995. You can take these rankings and shove them. Stop wasting my and everyone else's time!

Where's the University of Virginia? (not even in the full data)

Isn't one of the flaws here that you are basing at least some of these measures (e.g. size, faculty) on a search against only the top-level domain for each school? This ends up varying greatly based on how schools manage their overall Web infrastructure (i.e. many decentralized Web servers with different domains versus a more centralized approach on a common domain).

Just wanted to comment on Pitt. U.Pitt is actually not a big school compared to Penn State or Ohio State. But, they do have a big medical school and hospital. Perhaps that is why the ranking is so high. They are doing some good things in the biomedical research. For one thing, they rank top ten in NIH funding.

Interesting work ... at about the same time I constructed a different international league table using Google, which I call the G-Factor.

The G-Factor is simply the number of links to a university's website from other university websites, so it is a crude kind of peer review assessment.

In January 2006 I ran some 90,000 Google queries to gather the data (actually it was more like a million queries, because it took several goes to iron out various kinks in the script). Each full run takes a couple of days, simply to slow down the ping rate enough that Google doesn't block them (and of course good netizenry!).

My results for the top international 300 universities are linked here.

Personally I think this type of approach is far superior to the existing ranking (SJTU, THES, US News etc) because they are data-driven - and the data that drives them is hard to game or fake.

Please have a look at my results too and let me know what you think!

Peter H

I really commend your approach. The scientific and independent approach of this project is much better than that of US News. It is very unfortunate that some people whose schools are not in the list become very petty. I guess it speaks a lot about what 'quality' education imprints on them. Again, objective rankings like this are the way to go!

Best regards from NY!

Check also the similar University web popularity rankings published by

4 International Colleges & Universities

It's all good, but I would like to see you list universities of other countries too, because for example the University of Cape Town can compete with all those institutions, and I give truth to this and I'm not even studying there.

I think it's confidence that matters, not the college's ranking.

Regards,

Komail Noori

Web Site Design - SEO Expert

Great Blog and information well done

I like the technique Link building

to Improve online sales on the Website traffic i think that would be a great idea...

It's a good thing. I use http://www.keylords.com for the keyword selection to get ranked new articles i write and this works just perfect with it.

I get my new articles into the 1st SERP in a couple of hours and they remain there forever. Just make sure you select your keywords.

Honestly, it makes me laugh how people write random stuff and then whine about not being ranked, or being kicked out of SERPs after a while. Sure thing you get what you deserve if you cannot chose the keywords google is hungry for to keep you in high positions. Just use some of your brain to understand how demand and supply works, then find words that are demanded more than supplied using some keyword tool like keylords and you're all set up. Works for me perfectly.

Thank you. Nice blogs.

i like this ,

i see also good universities ranking

EduRoute

www.eduroute.info

but i wonder why all ranking is lead to the same result
